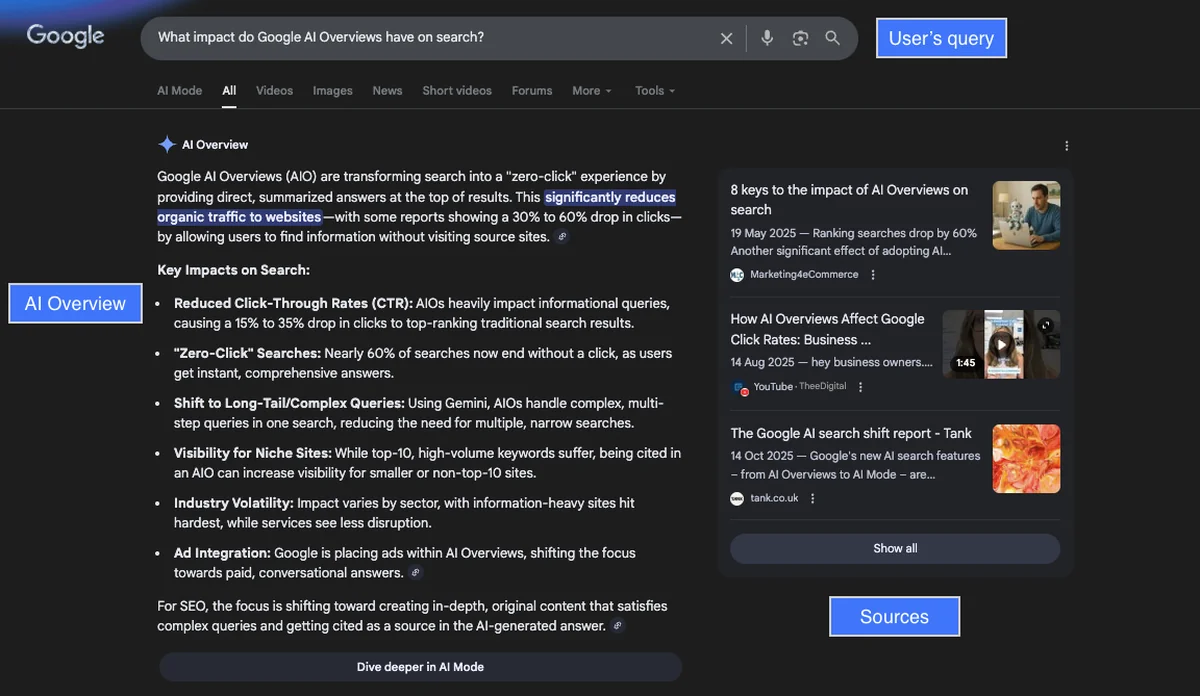

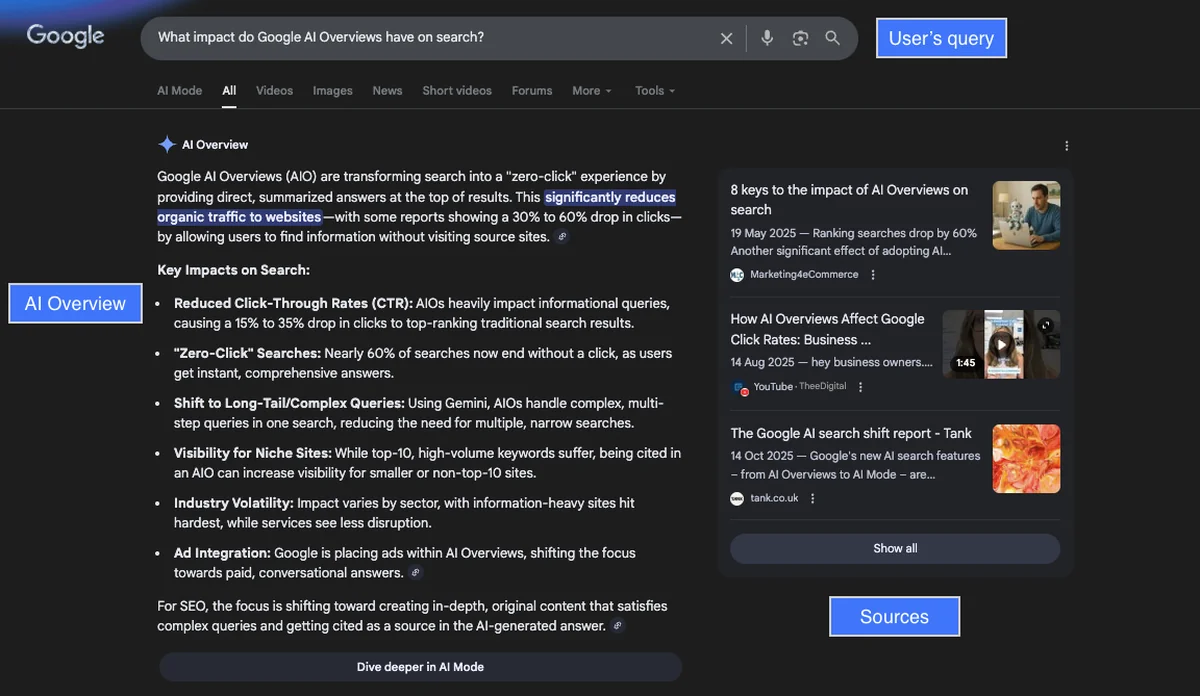

Since their initial launch in the United States in 2024 and subsequent international expansion, AI Overviews have been redefining how visibility works in Google Search. Instead of a results page dominated by blue links, users increasingly encounter AI-generated summaries that answer questions directly, supported by cited sources and relevant links.

As a result, terms like AI Overviews SEO and AI search visibility have become common in SEO discussions. Yet much of the conversation is driven by assumptions rather than a clear understanding of how these systems actually function. This article takes a deliberately restrained approach: no shortcuts or “hacks,” but a realistic explanation of what influences visibility, and what tends to be overstated.

What are Google AI Overviews?

Google explains that AI Overviews provide an AI-generated snapshot with key information and links that help users dig deeper. Essentially, they function as a new search layer designed to answer a user’s question directly within the search results, while still pointing to relevant sources for further exploration. Because they are now a structural part of the SERP experience, visibility is no longer only about ranking, but also about whether your content is selected and cited within that summary.

The most important distinction is that AI Overviews are not a new ranking system. They function as a presentation layer that uses generative AI while remaining grounded in Google Search. In Google’s own explainer, “How AI Overviews in Search work,” the fundamentals are described as AI-generated summaries supported by links that allow users to explore the web in more depth.

What impact do AI Overviews have on search behavior?

Search results have not been “just links” for a long time. Over the years, they have evolved into a collection of interfaces and features competing for user attention. AI Overviews add another layer to this environment, one with a particularly strong impact on how users now interact with search results.

What makes AI Overviews more disruptive than earlier SERP features is their ability to satisfy user intent before any click occurs. When an AI-generated summary is shown, users can often get a clear, complete answer directly on the results page. As a result, many searches end earlier, without the need to visit a website.

At the same time, Google presents AI Overviews as a way to surface links in multiple places and expose users to a broader range of sources. In practice, both dynamics can exist at once, depending on the query and intent.

In general, AI Overviews influence search behavior in a few key ways:

Lower click-through rates: Informational queries often see a decline in clicks, as users get their answers directly from the AI summary and end their search before clicking.

More zero-click searches: A growing share of searches end without any click when the overview fully resolves the intent.

Earlier intent resolution: Users make decisions faster, sometimes without scrolling further down the SERP.

Shift toward complex queries: AI Overviews are especially effective for longer, multi-step or explanatory searches.

New visibility patterns: Pages cited in AI Overviews may gain exposure even if they do not rank in the top organic positions.

Trust and attention: AI Overviews capture most user attention and are often perceived as an authoritative filter, even though trust in their accuracy is not absolute. Users tend to scan the AI summary first and only click through when they want more detail or clarification, leading to more selective clicking and fewer overall visits.

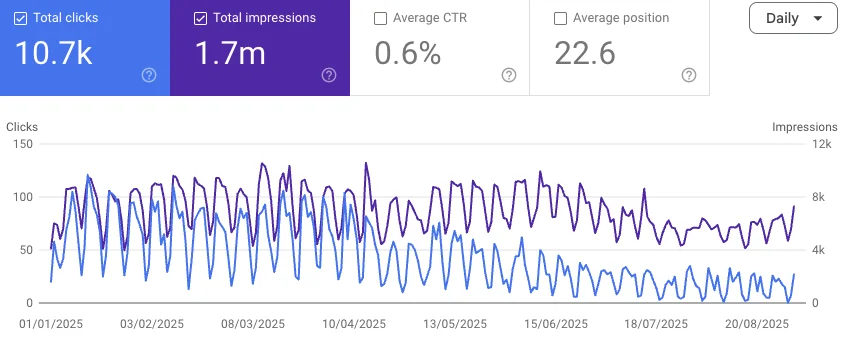

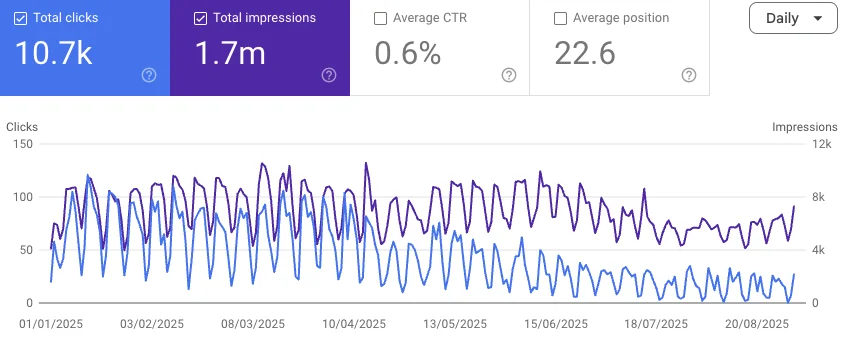

The chart shows this shift in user behavior. While total impressions remain high and relatively stable, the number of clicks gradually declines over the analyzed period. Because CTR is clicks divided by impressions, this results in a low average CTR, even though overall visibility in Search does not appear to be declining at the same rate.

This pattern is consistent with the impact of AI Overviews: content continues to appear in search results and be exposed to users, but a significant portion of user intent is resolved directly on the SERP. As a result, searches increasingly reach their conclusion without a website visit, reducing organic traffic without necessarily indicating a loss of relevance or coverage.
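To make the arithmetic behind this pattern concrete, here is a minimal Python sketch that computes CTR per period and compares the change in clicks with the change in impressions. The monthly figures and the `Period` class are invented for illustration; they are not the data behind the chart.

```python
from dataclasses import dataclass


@dataclass
class Period:
    label: str
    clicks: int
    impressions: int

    @property
    def ctr(self) -> float:
        # CTR = clicks / impressions; guard against empty periods.
        return self.clicks / self.impressions if self.impressions else 0.0


def relative_change(first: float, last: float) -> float:
    """Change from the first to the last value, as a fraction of the first."""
    return (last - first) / first


# Hypothetical figures for illustration only (not taken from the chart).
periods = [
    Period("month 1", clicks=1200, impressions=40000),
    Period("month 2", clicks=900, impressions=39500),
    Period("month 3", clicks=600, impressions=40000),
]

impression_change = relative_change(periods[0].impressions, periods[-1].impressions)
click_change = relative_change(periods[0].clicks, periods[-1].clicks)

for p in periods:
    print(f"{p.label}: CTR {p.ctr:.2%}")
print(f"impressions {impression_change:+.0%}, clicks {click_change:+.0%}")
```

Reading the two change figures side by side is the point: stable impressions with falling clicks suggest intent is being resolved on the SERP, whereas both falling together would point to a loss of coverage.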

The overall impact is not uniform, though. For some queries, AI Overviews reduce traffic by resolving intent early. For others, they create new visibility opportunities and can drive more qualified visits when a source is selected and cited.

How Google AI Overviews decide what to show

A useful way to understand AI Overviews is to separate three layers:

Trigger: does an AI Overview appear for this query at all?

Inclusion: is your page selected as a supporting link/citation?

Outcome: what happens next (clicks, engagement, brand impact)?

Google notes that AI Overviews (and related AI Search experiences) may use query fan-out, meaning the system can run multiple related searches across subtopics and variations to assemble a response and identify supporting pages.

This matters because it helps explain why inclusion isn’t the same as “ranking #1.” Selection can happen at passage level, across multiple sub-queries, with an emphasis on corroboration and confidence.

Signals that influence AI Overview visibility (and which don’t)

Google is explicit that there are no special “AI Overviews optimizations” required beyond what applies to Search generally. The fundamentals remain the baseline: pages need to be indexable, discoverable, readable, and reliable.

In practice, the signals that matter tend to work as a chain:

Eligibility: the page must be crawlable, indexed and eligible to appear with a snippet.

Discoverability: strong internal linking increases the chance that relevant pages are retrieved during fan-out and selection.

Citeability: content that directly addresses sub-questions and supports specific claims with information from official sources is more suitable as evidence than generic repetition.

Clarity: key information should be accessible in plain text (not trapped behind heavy UI or purely visual layers).

Structured data: helpful when it matches visible content, but not decisive on its own. It helps clarify meaning and structure for search engines, supporting comprehension rather than acting as a ranking or selection trigger.

Multiplatform presence: Independent studies show that AI Overviews frequently cite a relatively small group of highly authoritative platforms, such as Wikipedia, YouTube, and Reddit, while many other sources receive little or no exposure. Being present on these types of platforms helps increase the likelihood that your brand or content is surfaced, referenced, or indirectly reinforced within AI-generated summaries, even when users do not click through to a website.
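The eligibility link in this chain can be partly checked from a page’s own HTML. The sketch below uses Python’s standard `html.parser` to look for robots meta directives that block indexing or snippets (`noindex`, `nosnippet`, `max-snippet:0`). It is a rough first check under stated assumptions: the function name `snippet_blockers` is hypothetical, and the check does not cover `X-Robots-Tag` HTTP headers, robots.txt rules, `data-nosnippet` attributes, or the page’s actual index status.

```python
from html.parser import HTMLParser

# Robots directives that prevent indexing or snippet display.
BLOCKING = {"noindex", "nosnippet", "max-snippet:0"}


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            for token in attr.get("content", "").split(","):
                # Normalize "max-snippet: 0" and "NOINDEX" style variants.
                self.directives.add(token.strip().lower().replace(" ", ""))


def snippet_blockers(html):
    """Return the blocking directives found in the page's meta tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives & BLOCKING


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(snippet_blockers(page))
```

An empty result only means no blocking meta tag was found; it does not confirm that the page is indexed.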

What tends to be overstated is the idea of a separate AI layer to game. Similarly, E-E-A-T is best treated as an editorial quality lens rather than a direct scoring system you “optimize for.” And ranking #1 does not guarantee citation, because inclusion is often about the best supporting passage, not the highest-positioned URL.

How AI Overview visibility can be measured

AI Overviews do not introduce new metrics, but they fundamentally change how existing metrics should be interpreted. Because AI Overviews are reported within standard Web search data in Google Search Console, they are measured using the same definitions for clicks, impressions, and position as traditional organic results. This creates a gap between what users experience in the search results and what teams can directly observe in reporting tools.

In Search Console, a click is only recorded when a user leaves Google and visits an external site. Interaction within the AI Overview itself (reading the summary, scanning cited sources, or forming an opinion) does not register as a click. Impressions are counted only when a result is actually visible on screen. For AI Overviews, this often requires the overview to be expanded or scrolled into view. As a result, impressions tend to underrepresent how often content is effectively seen or used as context by users.

Position introduces another layer of complexity. When an AI Overview appears, the entire overview is assigned a single position, and all cited links within it share that position. A reported average position of “1” may therefore indicate that the AI Overview block was shown at the top of the page, not that an individual page ranked first in the classic SEO sense. This makes position a signal of SERP structure rather than a direct indicator of competitive ranking.

These mechanics explain why familiar performance indicators can behave in unexpected ways. Click-through rates may decline even when a page is consistently cited in AI Overviews. Impressions may appear low relative to perceived visibility. And changes in average position may reflect the presence or absence of an AI feature rather than shifts in organic ranking strength.

Because of this, performance analysis needs to move away from single metrics and toward a layered interpretation:

The first layer concerns triggering: which query clusters tend to show AI Overviews at all.

The second layer is inclusion: whether a domain is selected as a supporting source, and how stable that selection is over time.

The third layer focuses on outcomes beyond the SERP, such as engagement, conversion behavior, and overall traffic quality, which can only be evaluated by combining Search Console data with analytics data.
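As a rough illustration of the first layer, the sketch below groups query-level rows into clusters and reports each cluster’s CTR alongside the share of its queries where an AI Overview was observed. The rows are invented, and both the `cluster` label and the `ai_overview_seen` flag are assumptions: Search Console provides neither, so they would have to come from your own grouping rules and from manual SERP checks or a third-party tracker.

```python
from collections import defaultdict

# Hypothetical rows. "cluster" and "ai_overview_seen" are assumptions:
# Search Console does not provide either field.
rows = [
    {"query": "what is structured data", "cluster": "structured data",
     "clicks": 40, "impressions": 2000, "ai_overview_seen": True},
    {"query": "how does schema markup work", "cluster": "structured data",
     "clicks": 20, "impressions": 1000, "ai_overview_seen": True},
    {"query": "seo agency pricing", "cluster": "pricing",
     "clicks": 90, "impressions": 1500, "ai_overview_seen": False},
]


def summarize_clusters(rows):
    """Aggregate clicks and impressions per cluster and derive CTR and trigger rate."""
    totals = defaultdict(
        lambda: {"clicks": 0, "impressions": 0, "queries": 0, "triggered": 0}
    )
    for row in rows:
        t = totals[row["cluster"]]
        t["clicks"] += row["clicks"]
        t["impressions"] += row["impressions"]
        t["queries"] += 1
        t["triggered"] += int(row["ai_overview_seen"])
    return {
        cluster: {
            "clicks": t["clicks"],
            "impressions": t["impressions"],
            "ctr": t["clicks"] / t["impressions"] if t["impressions"] else 0.0,
            # Share of queries in the cluster where an AI Overview was observed.
            "trigger_rate": t["triggered"] / t["queries"],
        }
        for cluster, t in totals.items()
    }


summary = summarize_clusters(rows)
for cluster, stats in summary.items():
    print(cluster, f"CTR {stats['ctr']:.1%}", f"trigger rate {stats['trigger_rate']:.0%}")
```

This covers triggering only. The outcome layer would still require joining these clusters with session and conversion data from an analytics tool.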

A real-world example

A practical scenario helps clarify this shift:

Consider an informational page that begins appearing regularly as a cited source in AI Overviews for a group of high-volume queries.

After AI Overviews are introduced, Search Console shows a decline in clicks and CTR, while average position trends toward the top of the results.

At the same time, analytics data shows that the organic sessions that do occur spend more time on the page and convert at a higher rate.

In this situation, the drop in clicks does not indicate a loss of relevance or visibility. Instead, it reflects that many users are satisfied earlier in the journey, while those who do click arrive with stronger intent.

AI Overviews do not make performance impossible to measure, but they do require a different mindset. Rankings, impressions, and CTR remain useful as diagnostic signals that describe changes in the search environment. However, they are no longer sufficient on their own. The more meaningful indicators of success increasingly lie in whether content is consistently selected as a trusted source and whether the traffic that follows contributes to meaningful outcomes.

Do’s & Don’ts when measuring AI Overview visibility

AI Overviews change not only how search results are displayed, but also how performance data should be interpreted. Because they are reported within standard Search Console metrics, teams need to be deliberate in how they analyze and explain results.

Do’s

Do analyze query clusters instead of individual keywords: Focus on groups of queries that consistently trigger AI Overviews. Single-keyword fluctuations are too volatile to support reliable conclusions.

Do track inclusion over time: Monitor whether your domain is cited as a supporting source and how stable that inclusion is across weeks or months. Consistency matters more than one-off appearances.

Do combine Search Console with analytics data: Use Search Console to understand exposure in search, and analytics tools to evaluate what happens after the click: engagement, conversions, and overall traffic quality.

Do treat position and CTR as diagnostic signals: Position and CTR help indicate changes in the SERP environment, but they should not be treated as direct success or failure metrics in AI-driven results.

Do expect fewer but higher-intent clicks: AI Overviews often filter out low-intent traffic. A decline in clicks can coincide with stronger downstream performance and more qualified sessions.
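Tracking inclusion over time can be as simple as logging, once a week, whether your domain was cited for a query cluster, and then summarizing that log. The sketch below is one possible way to do it. The function `inclusion_stability` and the weekly observations are hypothetical, and the log itself would have to be collected outside Search Console, which does not report citations.

```python
def inclusion_stability(weekly_cited):
    """Summarize a chronological list of weekly True/False citation checks."""
    weeks = len(weekly_cited)
    if weeks == 0:
        return {"weeks": 0, "share_cited": 0.0, "longest_gap": 0}

    longest_gap = 0
    current_gap = 0
    for cited in weekly_cited:
        # A gap is a run of consecutive weeks without a citation.
        current_gap = 0 if cited else current_gap + 1
        longest_gap = max(longest_gap, current_gap)

    return {
        "weeks": weeks,
        "share_cited": sum(weekly_cited) / weeks,
        "longest_gap": longest_gap,
    }


# Hypothetical weekly checks for one query cluster (True = domain was cited).
observations = [True, True, False, True, True, True, False, False]
stability = inclusion_stability(observations)
print(stability)
```

Looking at the share of weeks cited together with the longest gap helps separate a stable source from one that appears only occasionally.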

Don’ts

Don’t assume a drop in clicks means a loss of visibility: Users may read and absorb information directly from the AI Overview without clicking. This cognitive exposure is not fully captured in Search Console.

Don’t interpret “position 1” as a traditional ranking win: In AI Overviews, position often reflects the placement of the AI block itself, not a classic organic ranking for an individual page.

Don’t expect feature-level reporting in Search Console: There is no dedicated AI Overview filter or report. AI Overview data is folded into the overall Web search totals, so plan your analysis around that rather than waiting for a separate view.

Don’t draw conclusions from short-term fluctuations: AI Overviews are dynamic. Day-to-day or week-to-week changes should be interpreted cautiously and evaluated over longer time horizons.

What AI Overviews mean for SEO and GEO strategies

AI Overviews reinforce a shift that has been building for years: more evaluation now happens before the click, and sometimes without a click at all. Users may form an opinion about a brand, product, or solution before visiting a website, or without ever visiting one.

Visibility in search is no longer only about ranking positions. It increasingly depends on whether content is clear, authoritative, and structured in a way that makes it suitable to be selected and cited within AI-generated summaries.

For SEO teams, this does not invalidate existing fundamentals. Crawlability, internal linking, clear text content, appropriate media, page experience, and accurate structured data remain essential. What changes is how success should be interpreted. Ranking alone becomes a narrow metric in feature-dominated SERPs, and CTR becomes less reliable as a primary indicator of visibility or impact.

This is where Generative Engine Optimization (GEO) becomes relevant as a framework, without turning it into hype. For Google AI Overviews specifically, inclusion is still rooted in Search systems and index logic rather than external reputation signals alone. GEO becomes more relevant when visibility across multiple generative platforms is the goal, where broader context, off-site signals, and consistency across the web play a larger role.

For teams looking to understand this shift beyond individual features, it helps to view AI Overviews as part of a wider transformation in how discovery, evaluation, and decision-making are shaped by AI-driven search experiences.

What still works, and what needs rethinking

SEO fundamentals remain foundational. What needs rethinking is the assumption that ranking automatically equals visibility. In feature-dominated SERPs, content increasingly needs to function as evidence: discoverable, trustworthy, and clearly aligned with real user questions.

Because decision-making is now often shaped before the click, and increasingly intersects with paid surfaces as well, it also makes sense to align this shift with Google Ads strategies, particularly for query types where organic CTR is declining.